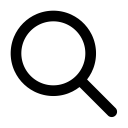

Research suggests climate misinformation is running rampant on Facebook

Facebook groups and pages are filled with climate misinformation. The independent watchdog group Real Facebook Oversight Board collaborated with the environmentalist non-profit Stop Funding Heat on an analysis of thousands of posts. The report also examines the loopholes that allow this misinformation to spread so rapidly and calls for platform-wide reforms to address the problem.

According to the report published on the Stop Funding Heat website, climate misinformation is shared and viewed thirteen times more often than fact-checked information. The research estimates that about 45,000 posts (across more than 195 accounts) downplay the climate crisis or deny it outright. Combined, these posts received between 818,000 and 1.36 million views, while reliable sources such as Facebook's own Climate Science Center are overlooked.

Loopholes and vaguely worded policies

At the center of the issue is Facebook’s internal fact-checking policy. Researchers found that only 3.6% of the misinformation they identified ends up being fact-checked by Facebook. Fossil fuel advertisements that contain false information are also left untouched. Researchers urge Facebook to make public its internal findings on how climate misinformation spreads on its platform, and to explain exactly how it defines ‘misinformation’, given how few posts seem to qualify as such. Activists are also asking Facebook to ban climate misinformation in paid advertisements outright and to draw up a transparent plan for tackling the rapid spread of false information.

‘Facebook can essentially, with the push of a button, determine which posts get more attention’

Brecht Castel, journalist and fact-checker with the Belgian magazine Knack, is not surprised. ‘Facebook did too little, too late. Third-party fact-checkers are few and far between. Knack does about 20 checks a week for Facebook, but compared to the number of posts on the site, that’s just scratching the surface.’

‘Everything is algorithm-based’, says Castel. ‘Facebook can essentially, with the push of a button, determine which posts get more attention. Right now, posts that evoke a lot of emotion get boosted the most. As it happens, those tend to contain a lot of misinformation. More transparency on Facebook’s end would definitely be beneficial here.’

User vigilance

As for other solutions, Castel suggests educating users in basic fact-checking skills as a first step. ‘Teaching your readers to fact-check for themselves would be a good solution. The techniques that journalists use to determine whether something is true are valuable tools for dealing with false information. If everyone stayed vigilant and took the time to do a reverse image search, for example, misinformation would not spread as quickly.’

A blog post by Google News Lab offers five simple fact-checking tips that can help users get started.

Text: Leïlani Duroyaume, final edit: Marie-Julie Van de Sijpe

Collage: Leïlani Duroyaume